A cross-Canada team of researchers has brought quantum computing and generative AI together to prepare for the Large Hadron Collider’s next upgrade.

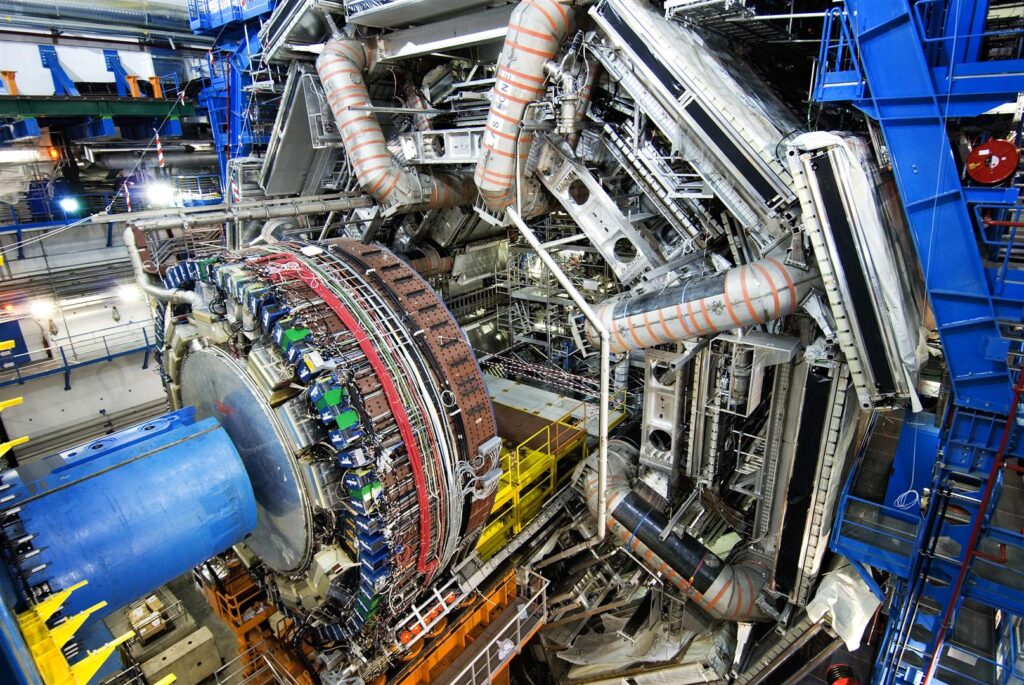

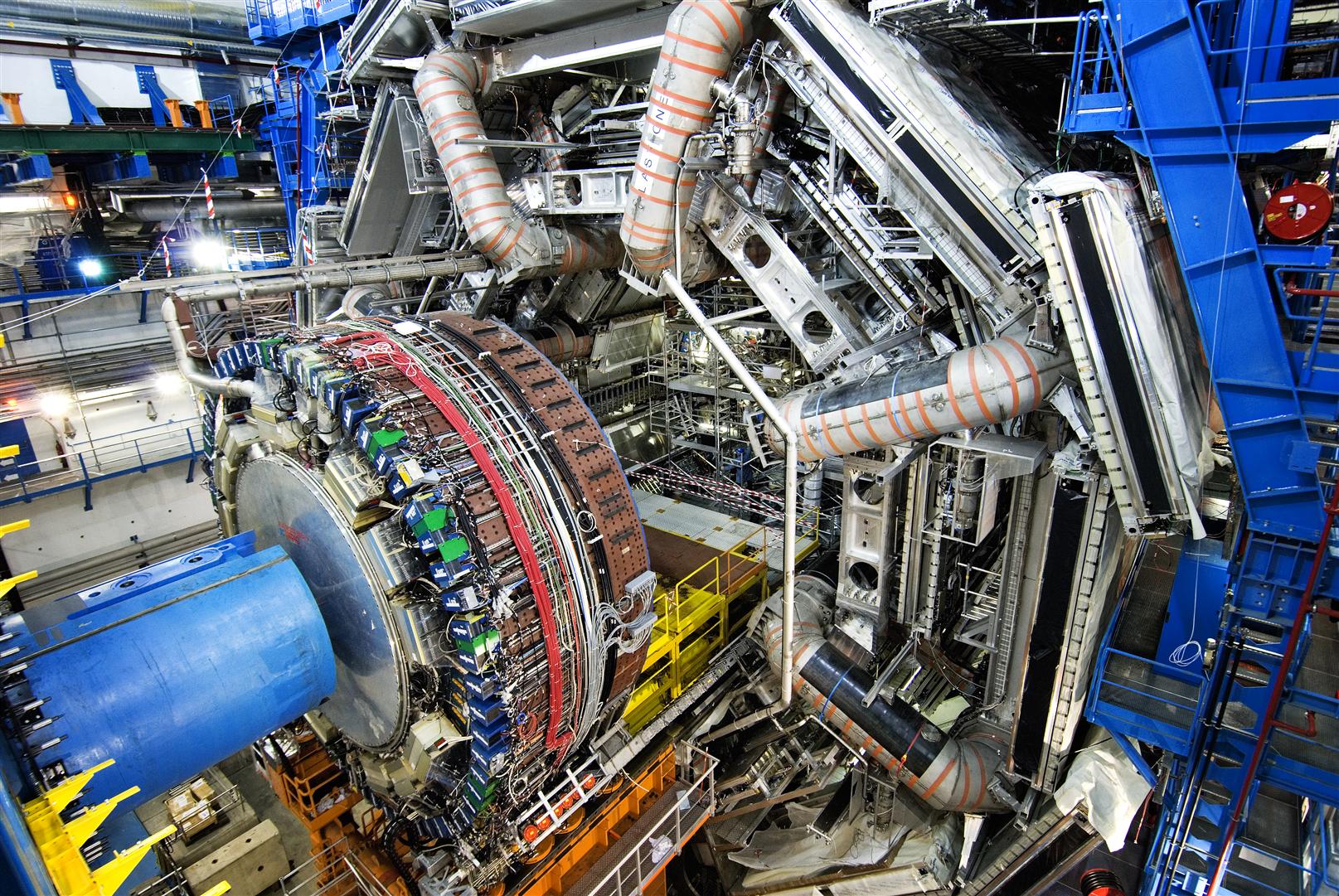

In the world of collider physics, simulations play a key role in analyzing data from particle accelerators. Now, a cross-Canada effort is combining quantum computing with generative AI to create novel simulation models for the next big upgrade of the Large Hadron Collider (LHC) – the world’s largest particle accelerator.

In a paper published in npj Quantum Information, a team that includes researchers from TRIUMF, Perimeter Institute, and the National Research Council of Canada (NRC) is the first to use annealing quantum computing and deep generative AI to create simulations that are fast, accurate, and computationally efficient. If the models continue to improve, they could offer a new way to create synthetic data to help analyze particle collisions.

Why simulations are essential for collider physics

Simulations broadly assist collider physics researchers in two ways. First, researchers use them to statistically match observed data to theoretical models. Second, scientists use simulated data to help optimize the design of the data analysis, for instance by isolating the signal they are studying from irrelevant background events.
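To illustrate the second use, here is a minimal Python sketch of one common way simulated events guide an analysis choice: scanning a threshold ("cut") on a single observable and keeping the threshold that maximizes the approximate significance s/√(s+b), where s and b count the simulated signal and background events that pass. The Gaussian toy samples, the single observable, and the function name `best_cut` are illustrative assumptions, not part of the team's work.

```python
import math
import random

def best_cut(signal, background, cuts):
    """Return the threshold that maximizes s / sqrt(s + b) for events above it."""
    best_value, best_score = None, float("-inf")
    for cut in cuts:
        s = sum(1 for x in signal if x > cut)      # simulated signal events kept
        b = sum(1 for x in background if x > cut)  # simulated background events kept
        if s + b == 0:
            continue  # nothing survives this cut
        score = s / math.sqrt(s + b)
        if score > best_score:
            best_value, best_score = cut, score
    return best_value, best_score

rng = random.Random(0)
# Toy stand-ins for simulated events: one observable per event,
# with the signal peaking at higher values than the background.
signal = [rng.gauss(3.0, 1.0) for _ in range(1_000)]
background = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
cuts = [round(0.1 * i, 1) for i in range(40)]  # candidate thresholds 0.0 to 3.9
cut, score = best_cut(signal, background, cuts)
print(f"keep events with x > {cut}: s/sqrt(s+b) = {score:.1f}")
```

A loose cut keeps too much background and a tight one throws away signal; the simulated samples let the analysis find the compromise before touching real data.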

“To do the data analysis at the LHC, you need to create copious amounts of simulations of collision events,” explains Wojciech Fedorko, one of the principal investigators on the paper and Deputy Department Head, Scientific Computing at TRIUMF, Canada’s particle accelerator centre in Vancouver. “Basically, you take your hypothesis, and you simulate it under multiple scenarios. One of those scenarios will statistically best match the real data that has been produced in the real experiment.”
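The matching step Fedorko describes can be sketched under a toy one-parameter model: each hypothetical scenario is simulated, binned, scaled to the size of the data sample, and compared with the data using a Pearson chi-square, and the scenario with the smallest value fits best. The exponential distribution, the candidate rates, and the helper names are invented for illustration; real LHC analyses typically rely on likelihood fits that also account for systematic uncertainties.

```python
import random

def histogram(values, n_bins, upper):
    """Count values in n_bins equal-width bins on [0, upper); overflow is ignored."""
    counts = [0] * n_bins
    width = upper / n_bins
    for v in values:
        if 0 <= v < upper:
            counts[int(v / width)] += 1
    return counts

def chi2(observed, expected):
    """Pearson chi-square between observed and expected bin counts."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected) if e > 0)

rng = random.Random(1)
N_BINS, UPPER = 10, 5.0
# Stand-in for measured data; the "true" parameter (1.0) is hidden from the comparison.
data = [rng.expovariate(1.0) for _ in range(5_000)]
observed = histogram(data, N_BINS, UPPER)

scores = {}
for rate in (0.5, 1.0, 1.5, 2.0):  # hypothetical competing scenarios
    sim = [rng.expovariate(rate) for _ in range(20_000)]
    # Normalize the simulated counts to the size of the data sample.
    expected = [c * len(data) / len(sim) for c in histogram(sim, N_BINS, UPPER)]
    scores[rate] = chi2(observed, expected)

best = min(scores, key=scores.get)
print(best, {r: round(x, 1) for r, x in scores.items()})
```

Because each scenario needs its own large simulated sample, the cost of this loop grows with the number of hypotheses tested, which is why simulation speed matters so much.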

Currently, the LHC is preparing for a major shutdown ahead of its high-luminosity upgrade. When it comes back online, it will require more complex simulations that are reliably accurate, fast to produce, and computationally efficient. Those requirements could create a bottleneck, because supplying the computing power these simulations would need under current methods will no longer be feasible.

“Simulations are projected to cost millions of CPU years annually when the high luminosity LHC turns on,” says Javier Toledo-Marín, a research scientist jointly appointed at Perimeter Institute and TRIUMF. “It’s financially and environmentally unsustainable to keep doing business as usual.”

Javier Toledo-Marín, researcher at Perimeter Institute and TRIUMF, co-led the development of the novel quantum-AI simulation framework.

When quantum and generative AI collide

Particle physicists use specialized detectors called calorimeters to measure the energy released by the showers of particles that result from collisions. Scientists combine the readings from these and other detectors to piece together what happened at the initial collision. It’s through this process of comparing simulations to experimental data that researchers discovered the Higgs boson at the Large Hadron Collider in 2012. Compared to the other sub-detector systems within the LHC experiments, calorimeters and the data they produce are the most computationally intensive to simulate, and as such they represent a major opportunity for efficiency gains.

In 2022, researchers seeking to spur rapid advances in calorimeter simulation, and to address the coming computational bottleneck at the LHC, issued a scientific “challenge.” Named the “CaloChallenge,” it provided datasets based on LHC experiments for teams to develop and benchmark simulations of calorimeter readings. Fedorko and the team are the only ones so far to take a full-scale quantum approach, with help from D-Wave Quantum Inc.’s annealing quantum computing technology.

Annealing quantum computing is usually used to find the lowest-energy state of a system, or a state close to it, which makes it well suited to optimization problems.
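Annealers such as D-Wave's work on Ising-type problems, where each spin s_i is −1 or +1 and the energy is E(s) = Σ_i h_i s_i + Σ_{i<j} J_ij s_i s_j, with h_i the bias on a qubit and J_ij the weight (coupling) between two qubits. The sketch below is a classical simulated-annealing analogue, not quantum annealing: it slowly lowers a temperature while flipping spins with the Metropolis rule, and keeps the lowest-energy state it visits. The six-spin instance and all parameter values are arbitrary assumptions; the exhaustive check at the end works only because the toy has 2^6 = 64 states.

```python
import itertools
import math
import random

def energy(spins, h, J):
    """Ising energy E(s) = sum_i h_i s_i + sum_(i,j) J_ij s_i s_j, spins in {-1, +1}."""
    return (sum(hi * si for hi, si in zip(h, spins))
            + sum(w * spins[i] * spins[j] for (i, j), w in J.items()))

def simulated_annealing(h, J, sweeps=2000, t_hot=5.0, t_cold=0.05, seed=0):
    """Classical simulated annealing: Metropolis spin flips under a falling temperature."""
    rng = random.Random(seed)
    n = len(h)
    nbrs = [[] for _ in range(n)]  # adjacency list: (neighbour, coupling)
    for (i, j), w in J.items():
        nbrs[i].append((j, w))
        nbrs[j].append((i, w))
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    best, best_e = list(spins), energy(spins, h, J)
    for sweep in range(sweeps):
        # Geometric cooling schedule from t_hot down to t_cold.
        t = t_hot * (t_cold / t_hot) ** (sweep / max(sweeps - 1, 1))
        for i in range(n):
            field = h[i] + sum(w * spins[j] for j, w in nbrs[i])
            delta = -2 * spins[i] * field  # energy change if spin i flips
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                spins[i] = -spins[i]
        e = energy(spins, h, J)
        if e < best_e:
            best, best_e = list(spins), e
    return best, best_e

# Hypothetical six-spin ring with mixed couplings.
h = [0.5, -0.3, 0.2, -0.1, 0.4, -0.6]
J = {(0, 1): 1.0, (1, 2): -0.7, (2, 3): 1.0, (3, 4): 0.8, (4, 5): -1.0, (5, 0): 0.6}
state, e_sa = simulated_annealing(h, J)
e_exact = min(energy(s, h, J) for s in itertools.product((-1, 1), repeat=len(h)))
print(state, e_sa, e_exact)
```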

After discussions with D-Wave, Fedorko, Toledo-Marín, and the rest of the team determined that D-Wave’s annealing quantum computers could be used to generate simulations. The key was to use annealing to manipulate qubits (the basic units of quantum information) in an unconventional way.

In the D-Wave quantum processor, a mechanism keeps the ratio between the ‘bias’ on a given qubit and the ‘weight’ linking it to another qubit constant throughout the annealing process. With the help of D-Wave, the team realized they could use this mechanism instead to guarantee outcomes for a subset of the qubits on a device. “We basically hijacked that mechanism to fix in place some of the spins,” says Fedorko. “This mechanism can be used to ‘condition’ the processor – for example, generate showers with specific desired properties – like the energy of a particle impinging on the calorimeter.”
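The idea of conditioning by fixing spins can be illustrated classically. The minimal sketch below is a Gibbs sampler for the Boltzmann distribution p(s) ∝ exp(−βE(s)) over the Ising energy E(s) = Σ_i h_i s_i + Σ_{i<j} J_ij s_i s_j. It is an analogy, not D-Wave's API or the team's model: the spins listed in `clamp` never change, so they act only as an extra bias h_i + Σ_c J_ic s_c on their free neighbours, and the free spins' statistics shift with the clamped value. The function name `clamped_gibbs`, the four-spin chain, and treating spin 0 as a stand-in for a condition such as incident particle energy are all hypothetical.

```python
import math
import random

def clamped_gibbs(h, J, clamp, n_samples=5000, burn_in=500, beta=1.0, seed=0):
    """Gibbs-sample p(s) ~ exp(-beta * E(s)) with the spins in `clamp` held fixed."""
    rng = random.Random(seed)
    n = len(h)
    nbrs = [[] for _ in range(n)]  # adjacency list: (neighbour, coupling)
    for (i, j), w in J.items():
        nbrs[i].append((j, w))
        nbrs[j].append((i, w))
    spins = [clamp.get(i, rng.choice((-1, 1))) for i in range(n)]
    free = [i for i in range(n) if i not in clamp]
    samples = []
    for step in range(burn_in + n_samples):
        for i in free:
            # Clamped neighbours enter only through this effective bias.
            field = h[i] + sum(w * spins[j] for j, w in nbrs[i])
            # Conditional probability p(s_i = +1 | all other spins).
            p_up = 1.0 / (1.0 + math.exp(2.0 * beta * field))
            spins[i] = 1 if rng.random() < p_up else -1
        if step >= burn_in:
            samples.append(list(spins))
    return samples

# Four-spin chain; negative J favours aligned neighbours in this sign convention.
h = [0.0, 0.0, 0.0, 0.0]
J = {(0, 1): -1.0, (1, 2): -1.0, (2, 3): -1.0}
for label in (+1, -1):
    samples = clamped_gibbs(h, J, clamp={0: label})
    mean_s3 = sum(s[3] for s in samples) / len(samples)
    print(f"spin 0 clamped to {label:+d}: mean of spin 3 = {mean_s3:+.2f}")
```

With these couplings the far end of the chain follows the clamped spin on average and changes sign when the condition flips, which is the kind of control a fixed "label" spin gives a generative sampler.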

The end result: an unconventional way to use annealing quantum computing to generate high-quality synthetic data for analyzing particle collisions.

The next phase of collider physics simulations

The published result is important because of its performance on three metrics: how fast the simulations are generated, how accurate they are, and how many computational resources they require. “For speed, we are in the top bound of results published by other teams and our accuracy is above average,” Toledo-Marín says. “What makes our framework competitive is really the unique combination of several factors – speed, accuracy, and energy consumption.”

The energy advantage comes from how the hardware scales. Many types of quantum processing units (QPUs) must be kept at extremely low temperatures, but giving a QPU multiple tasks does not significantly change its energy requirements. A standard graphics processing unit (GPU), on the other hand, uses more energy for each job it receives. As advanced GPUs become more and more power-hungry, QPUs could potentially scale up without a corresponding increase in computational energy requirements.
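The scaling argument can be captured in a toy model, assuming a QPU costs a large fixed amount of energy (dominated by cooling) plus a small amount per job, while a GPU costs a fixed amount per job. Under those assumptions, and with a positive fixed overhead, the QPU model breaks even once the number of jobs reaches fixed / (gpu_per_job − qpu_per_job). The function names and numbers below are hypothetical placeholders in arbitrary units, not measurements from the paper or from any real device.

```python
import math

def qpu_energy(jobs, fixed, per_job):
    """Toy model: large fixed overhead (e.g. cooling) plus a small cost per job."""
    return fixed + jobs * per_job

def gpu_energy(jobs, per_job):
    """Toy model: energy grows in proportion to the number of jobs."""
    return jobs * per_job

def break_even_jobs(fixed, qpu_per_job, gpu_per_job):
    """Smallest job count at which the QPU model uses no more energy than the GPU model."""
    if gpu_per_job <= qpu_per_job:
        return None  # with a positive fixed overhead, the QPU model never catches up
    return math.ceil(fixed / (gpu_per_job - qpu_per_job))

# Placeholder values in arbitrary energy units; not measurements.
FIXED, QPU_PER_JOB, GPU_PER_JOB = 1000, 1, 5
n = break_even_jobs(FIXED, QPU_PER_JOB, GPU_PER_JOB)
print(n)  # prints 250
```

Beyond the break-even point each additional job widens the gap in the QPU model's favour, which is the intuition behind the claim; whether real hardware behaves this way depends on figures the article does not give.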

Looking forward, the team is excited to test their models on new incoming data so they can fine-tune them, improving both speed and accuracy. If all goes well, annealing quantum computing could become an essential part of generating simulations.

“It’s a good example of being able to scale something in the field of quantum machine learning to something practical that can potentially be deployed,” says Toledo-Marín.

The authors are grateful for the support of their many funders and contributors, which include the University of British Columbia, the University of Virginia, the NRC, D-Wave, and MITACS.